For software engineers working with transactional systems, managing data imports and exports is an unusual part of the daily routine. But sometimes it happens: your app gains traction, enterprise clients start showing up, and they bring their biggest problem with them: their datasets. Suddenly, you're not just handling user interactions but massive data loads that push your service to its limits. Memory spikes, sluggish performance, and even crashes threaten to derail your system. At this point, you realize that a simple file upload can be a ticking time bomb if you parse it all at once.

The Challenge

Transactional systems are designed for real-time user actions: quick reads, writes, and updates that keep data flowing smoothly. But enterprises frequently need to import or export huge datasets for analytics, reporting, or data syncs. Whether it's a one-time migration or a regular data feed, these large imports can overwhelm the system, causing memory bloat, slowdowns, and even crashes.

Loading large files into memory all at once is risky: it can max out RAM and degrade the service for everyone else. With this in mind, my recommendation is simple: when dealing with large data imports, consider streaming from the beginning.

Why Start with Streaming?

If you’re handling large datasets, streaming isn’t just an optimization—it’s a foundational decision. You don’t want users to feel the impact of a large import, so it’s crucial to keep the system responsive and stable. Streaming allows you to process data incrementally, keeping memory usage low and stable, and preventing crashes or slowdowns. This approach is especially critical for transactional systems, where real-time operations must continue uninterrupted, while large imports are processed in the background.

How to Stream Data Efficiently

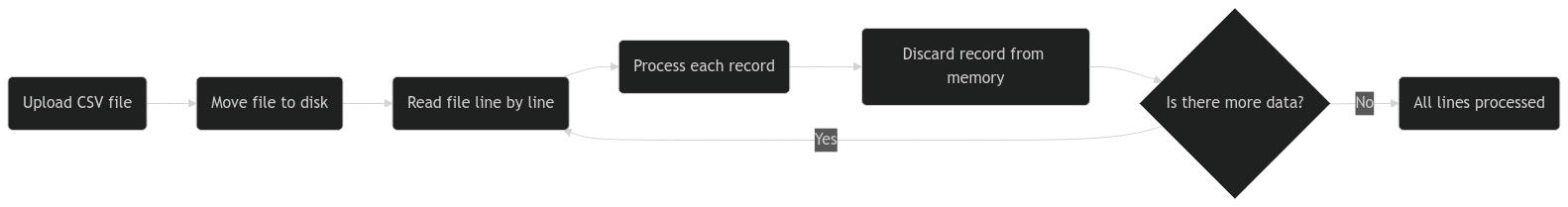

Efficient streaming involves breaking down large datasets into manageable chunks and processing them incrementally. But here’s the critical part: first, move the large file directly to disk instead of loading it into memory. Then, read it line by line (or in small sections), building each record individually, and discard it from memory once it’s processed or queued. If necessary, send each record immediately to a message queue for deferred processing (more on that later). This way, memory usage stays low and stable, allowing the system to handle massive imports smoothly without risk of overload.

Here's a simplified example in Node.js with Express and Multer, handling a large CSV file upload:

```javascript
const express = require('express');
const multer = require('multer');
const fs = require('fs');
const readline = require('readline');

const processRecord = (record) => {
  // Process each record here (for example, publish it to a message queue)
};

const app = express();

// Multer writes the uploaded file to disk instead of buffering it in memory
const upload = multer({ dest: 'uploads/' });

app.post('/upload', upload.single('file'), (req, res) => {
  const file = req.file;

  // Stream the file from disk rather than reading it all at once
  const readStream = fs.createReadStream(file.path, { encoding: 'utf8' });

  const stream = readline.createInterface({
    input: readStream,
    crlfDelay: Infinity
  });

  stream.on('line', (line) => {
    // Process each line of the CSV file here
    // (naive split: assumes no header row and no quoted commas)
    const [name, age, gender] = line.split(',');
    const record = { name, age, gender };
    processRecord(record);
  });

  stream.on('close', () => {
    // All lines have been processed
    console.log('CSV file processing complete');
  });

  // Respond right away; the file keeps being processed in the background
  res.send('File uploaded successfully');
});
```

In this example, we use Multer to handle file uploads, moving the file directly to disk. We then read the file line by line and pass each record to processRecord, which is where it would be handled directly or sent to a message queue for deferred processing.
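One detail worth considering: a `'line'` event handler does not wait for asynchronous work, so if handling a record involves a promise (a database write or a queue publish), records can pile up faster than they are processed. A minimal sketch of one alternative, assuming a hypothetical `importCsv` helper and an asynchronous `handleRecord` callback (neither is part of the example above), is to iterate the readline interface with `for await`, so the loop only pulls the next line after the previous record has been handled:

```javascript
const fs = require('node:fs');
const readline = require('node:readline');

// Hypothetical helper: reads a CSV file line by line and awaits the
// (assumed asynchronous) handler before moving on to the next line.
async function importCsv(filePath, handleRecord) {
  const rl = readline.createInterface({
    input: fs.createReadStream(filePath, { encoding: 'utf8' }),
    crlfDelay: Infinity,
  });

  let count = 0;
  try {
    for await (const line of rl) {
      if (line.trim() === '') continue; // skip blank lines

      // Naive split: assumes no header row and no quoted commas
      const [name, age, gender] = line.split(',');

      // The next line is not pulled until this promise resolves
      await handleRecord({ name, age, gender });
      count += 1;
    }
  } finally {
    rl.close();
  }
  return count; // number of records handed to the callback
}
```

readline may still buffer some lines internally, so this bounds memory rather than eliminating buffering altogether. For real-world CSV (quoted fields, embedded newlines), a dedicated streaming CSV parser would be a safer choice than `split(',')`.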

Managing Database Load with Message Queues

While streaming optimizes memory use, inserting data directly into an OLTP (Online Transaction Processing) database can still cause performance issues. Sudden, heavy insert loads can slow down the system, especially since indexing demands spike during bulk inserts. A message queue broker provides a powerful solution here:

- Accept Data Fast, Process Later: The message queue acts as a temporary holder, allowing us to accept data instantly and process it incrementally. This separation between data acceptance and processing avoids bottlenecks and keeps the database load manageable.

- Resilience Against Data Loss: Capturing data in a queue prevents loss and allows flexible processing schedules, so even during high-traffic times, we avoid overload. In this case, data arrives safely, and we handle it when resources are available.

- Maintain High Availability: Deferred processing means users experience seamless service. We prevent any spikes from heavy inserts, so the system remains responsive and reliable.
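The "accept fast, process later" flow from the list above can be sketched as follows. This is an illustration under stated assumptions, not a production setup: `ImportQueue` is a hypothetical in-memory stand-in for a real broker (so it offers none of the durability described above), and `insertBatch` is an assumed callback that would write one batch to the database.

```javascript
// Hypothetical in-memory stand-in for a message broker. A real deployment
// would use a durable broker; this class only illustrates the flow.
class ImportQueue {
  constructor() {
    this.messages = [];
  }

  // Accepting a record is cheap: it is only appended to the queue
  publish(record) {
    this.messages.push(record);
  }

  // Removes and returns up to maxSize records from the front of the queue
  takeBatch(maxSize) {
    return this.messages.splice(0, maxSize);
  }

  get size() {
    return this.messages.length;
  }
}

// Drains the queue in small batches, optionally pausing between batches
// so the database is never hit with one large burst of inserts.
async function drainInBatches(queue, insertBatch, { batchSize = 100, pauseMs = 0 } = {}) {
  let inserted = 0;
  while (queue.size > 0) {
    const batch = queue.takeBatch(batchSize);
    await insertBatch(batch); // assumed to perform one bulk insert
    inserted += batch.length;
    if (pauseMs > 0) {
      await new Promise((resolve) => setTimeout(resolve, pauseMs));
    }
  }
  return inserted; // total number of records passed to insertBatch
}
```

In this sketch the upload handler would only call `queue.publish(record)` for each parsed line, while a separate worker calls `drainInBatches` at whatever pace the database can sustain. The `batchSize` and `pauseMs` values are tuning knobs chosen for illustration.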

Conclusion

In my experience, combining streaming with message queue management in transactional systems is one of the most effective ways to handle large imports. Starting with streaming from the moment a user uploads a file keeps memory under control, prevents crashes, and ensures smooth operations—even with huge datasets. This foundational approach is a simple choice that makes a big difference for scalability and performance, providing peace of mind from the start.

While a message queue can help manage processing load by maintaining database stability and supporting high availability, it’s not always essential if data doesn’t require indexing in an OLTP database. The key takeaway? Begin with streaming. If your data import needs evolve, a message queue can be added later as needed. This approach is versatile, resource-efficient, and ideal for enterprise environments balancing real-time transactions with bulk data operations.